When real-time travel information becomes essential, accessibility can’t be an afterthought: it has to work through at least two senses. Edenspiekermann is currently developing a new signage and information system for Hamburg’s upcoming U5 line. Much of the information is communicated visually, so one crucial question was how to make it equally accessible for blind and visually impaired people.

To comply with the 2-Sense principle (visual + audio), an additional physical touchpoint was required: the “Anforderungsknopf” — a request button that translates real-time travel information into clear, reliable audio.

The goal wasn’t just to translate existing information into audio. It was to build a dedicated audio solution from scratch — an interaction that is unmistakable, trustworthy, and robust, especially for blind and visually impaired people who rely on non-visual feedback. The same approach applies to many public-facing, safety-relevant interfaces — elevators, intercoms, ticketing, wayfinding, and in-vehicle interactions — where clarity and trust matter most.

What we do: fast, evidence-based prototyping for accessible public infrastructure

We help mobility, infrastructure, and product teams validate accessible interactions quickly — across digital and physical touchpoints. By combining modern AI-supported prototyping with real-world hardware tests, we compress decision cycles, reduce risk, and build stakeholder confidence without relying on long, descriptive explanations.

We knew early on this would require hardware prototyping — this wasn’t something we could solve just on a screen. The real questions were physical, spatial, and systemic: Where does the button sit? How is it positioned within the station? How do you structure and convey information so it’s immediately understandable and quick to access? What kind of menu logic does it require?

What we delivered: an audio interaction concept, multiple artifacts, and a hardware prototype for stakeholder alignment

A rapid prototyping sprint designed to align stakeholders through tangible evidence rather than interpretation

An audio-only interaction concept with a clear information structure that supports the 2-Sense principle

Multiple prototype artifacts, including a usable hardware prototype, to test behavior and comprehension in real-world contexts (without overbuilding too early)

Three iterations: from flow logic to content details to a usable hardware prototype

In accessibility work, speed is not about cutting corners — it’s about learning quickly without putting people at risk. So we built fast, tangible prototypes that stakeholders could actually experience, not just review in a slide deck.

To move quickly, we structured the work in clear phases — each increasing fidelity and answering a different set of questions:

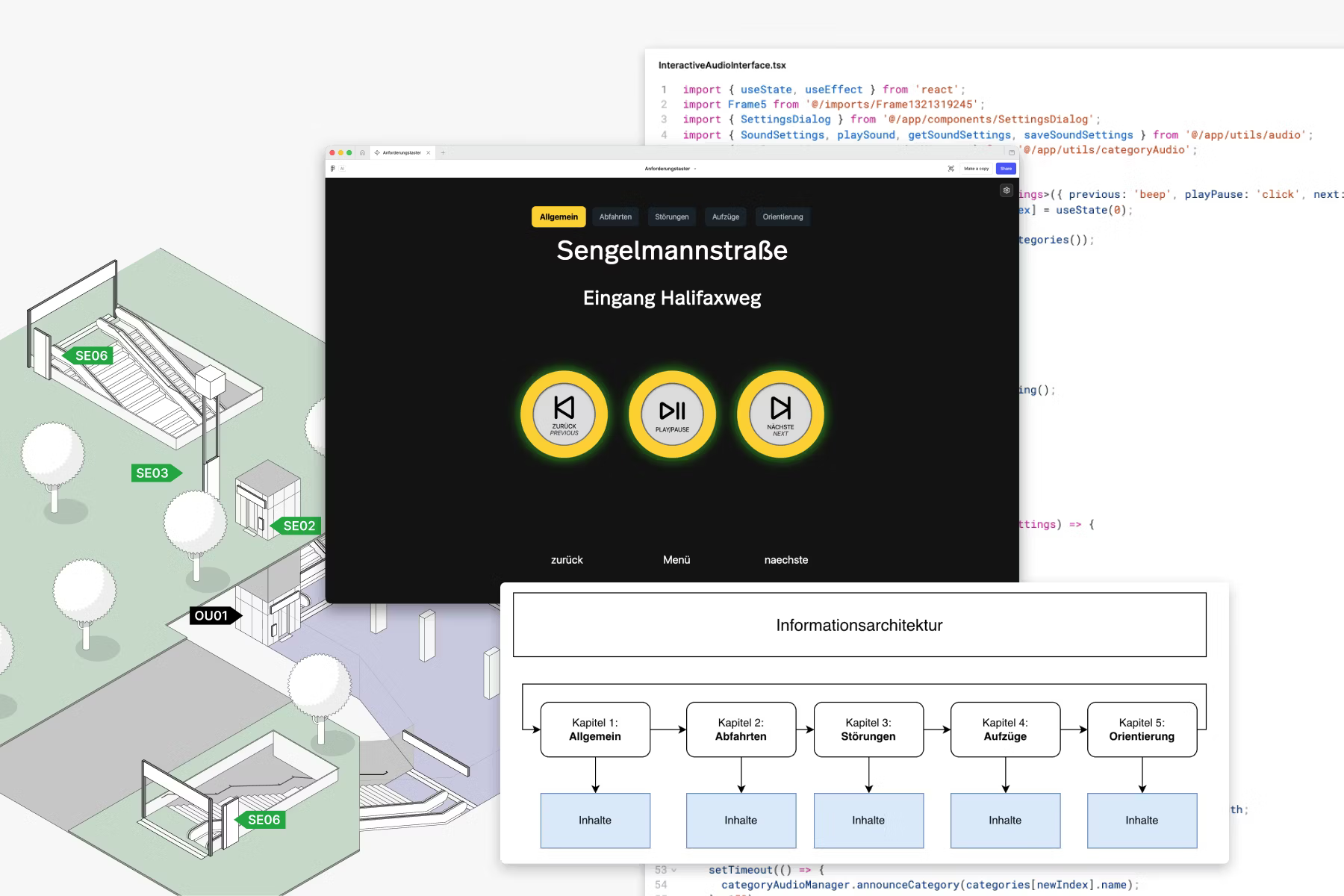

1. Proof of Concept in Figma Make: validate navigation and content structure

We started with Figma Make to quickly test menu structure, user flows, and interaction logic, so we could align early on what the experience should do before debating details.
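To make "menu structure and interaction logic" concrete, here is a minimal sketch of a single-button audio menu, written in Python so it runs without any hardware. It is not the project's actual flow: the menu items, the timeout value, and the `AudioMenu` class are all hypothetical and chosen only to illustrate the kind of logic such a proof of concept would validate.

```python
from dataclasses import dataclass, field

# Hypothetical menu items; the project's real menu structure is not published.
MENU = ["next_departures", "service_disruptions", "station_layout"]


@dataclass
class AudioMenu:
    """Single-button audio menu: each press advances, a long pause resets."""

    items: list = field(default_factory=lambda: list(MENU))
    timeout_s: float = 8.0   # assumed idle time after which the menu starts over
    _index: int = -1         # -1 means idle (nothing announced yet)
    _last_press: float = 0.0

    def press(self, now: float) -> str:
        """Handle a press at time `now` (seconds); return the item to announce."""
        if self._index >= 0 and now - self._last_press > self.timeout_s:
            self._index = -1  # too long since the last press: start from the top
        self._index = (self._index + 1) % len(self.items)
        self._last_press = now
        return self.items[self._index]
```

In this sketch the first press always announces the first item, quick follow-up presses step through the remaining items, and a long pause returns the menu to its start, so a person arriving at the button never lands in the middle of someone else's session.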

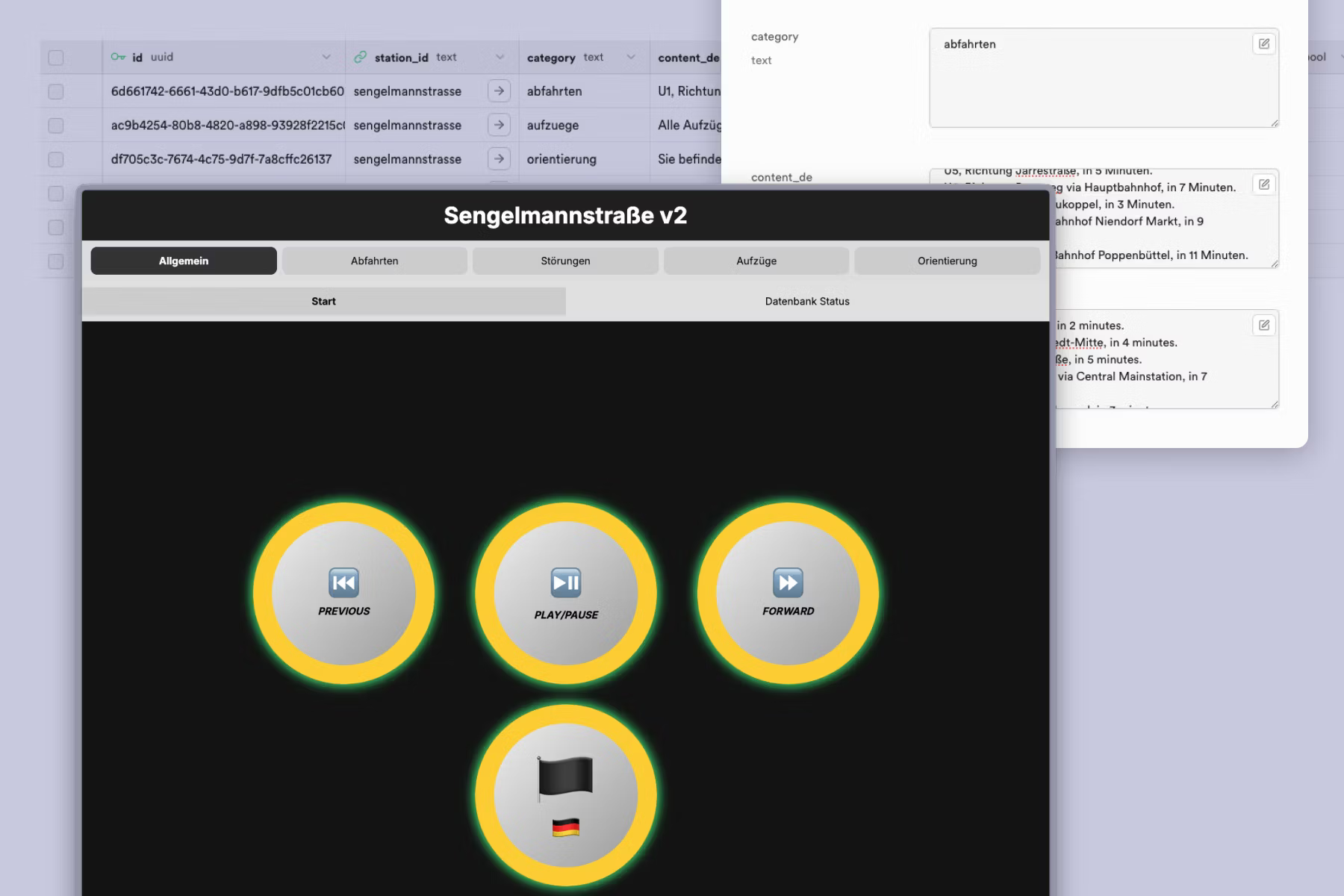

2. Concept Development with Vercel v0: define sound, voice, and verify scalability

Next, we moved into Vercel v0 to refine content questions (length, tone, multi-language support, and required sounds) so we could validate what the audio experience should feel like across different station types and locations along the journey.

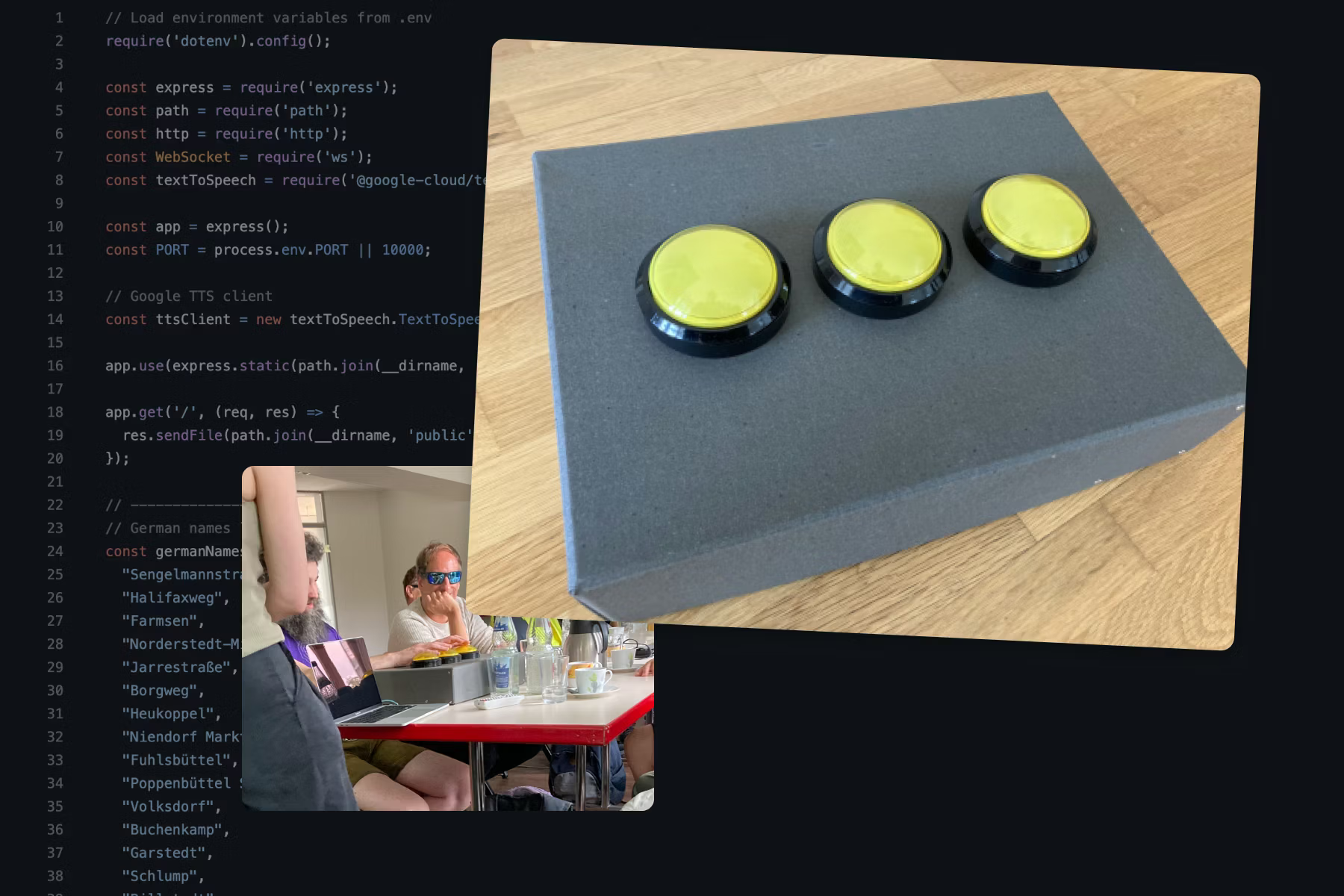

3. Real-World Behavior with Arduino: make it physical and test it with the target group

Finally, we used Arduino to make the prototype tangible, so we could discuss and validate it directly with blind users and stakeholders through an interaction they could actually try, not just imagine.
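One detail that only shows up once a button is physical is contact bounce: a single press can register as several rapid on/off readings. The sketch below shows one common way to handle this, accepting a press only after the raw reading has stayed stable for a short window. It is written in Python so it can be run without hardware; on an Arduino the same logic would sit in the sketch's main loop. The class name and the 30 ms window are assumptions for illustration, not values from the project.

```python
class DebouncedButton:
    """Report one press event per physical press, ignoring contact bounce."""

    def __init__(self, stable_ms: int = 30):
        self.stable_ms = stable_ms  # assumed window the reading must hold steady
        self._stable = False        # debounced state (False = released)
        self._candidate = False     # most recent raw reading
        self._since_ms = 0          # time at which the raw reading last changed

    def update(self, raw_pressed: bool, now_ms: int) -> bool:
        """Feed one raw reading; return True exactly once per confirmed press."""
        if raw_pressed != self._candidate:
            # Reading changed: restart the stability timer.
            self._candidate = raw_pressed
            self._since_ms = now_ms
            return False
        if raw_pressed != self._stable and now_ms - self._since_ms >= self.stable_ms:
            # Reading held long enough: accept the new state.
            self._stable = raw_pressed
            return raw_pressed      # True on a press, False on a release
        return False
```

Feeding this class a noisy sequence of readings yields a single event per press, which is what keeps the audio response unambiguous: one press, one announcement.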

This mix let us compress cycles from “weeks of discussion” into “hours of seeing and deciding.” Within a three-week prototyping sprint, we moved through multiple iterations, including testing with blind users and several rounds of feedback with external stakeholders, which allowed us to validate decisions quickly and responsibly.

Why this approach works: accessibility drives the requirements, hardware needs early proof, and prototypes align stakeholders faster than debate

Our collaboration with Hamburger Hochbahn revealed three key insights that shaped the outcome:

Accessibility isn’t a layer — it’s the core requirement

The 2-Sense principle isn’t a checkbox. It changes feedback, language, timing, and placement. If the experience only “works” visually, it doesn’t work.

Hardware needs earlier proof than software

With physical interfaces, late changes are expensive and often impossible at scale. Prototyping early wasn’t optional; it was risk management.

Stakeholders align faster when they can experience the interaction

The moment someone can press a button and hear the response, the conversation shifts from opinions to evidence.

Designing for blind people changes the procedure: listening tests, comprehension checks, and hands-on feedback replace visual reviews

Because the topic is sensitive, we were careful not to treat accessibility as a “story” or a marketing angle. Instead, we focused on respectful, practical questions:

Does the interaction reduce uncertainty?

Is the feedback immediate and unambiguous?

Does it work across station contexts without requiring explanation?

Does it preserve autonomy — especially in moments where people already feel exposed?

Each iteration answered a small set of questions, and each artifact made decision-making easier — without revealing more project detail than necessary.

The wow moment: a usable prototype turned long explanations into shared understanding — and accelerated decisions

What made this work especially strong was the shift from describing the experience to letting people try it. When stakeholders and the target audience could press a real button and hear it work, uncertainty dropped and alignment became much easier — because everyone discussed the same thing: a usable interaction, not an interpretation.