Building Amaphiko

In our last post about Amaphiko, Spiros covered the idea behind the platform, its goals and how we teamed up with Red Bull to make it a reality. After working on the project for almost a year, we want to share more about its technical side.

What does a social network need?

Building a social network is a complex task. People expect a lot from such a service, for example profiles, notifications, events, messaging, groups and a newsfeed. On top of that, Amaphiko has special features such as a graphical editor for creating project pages and articles.

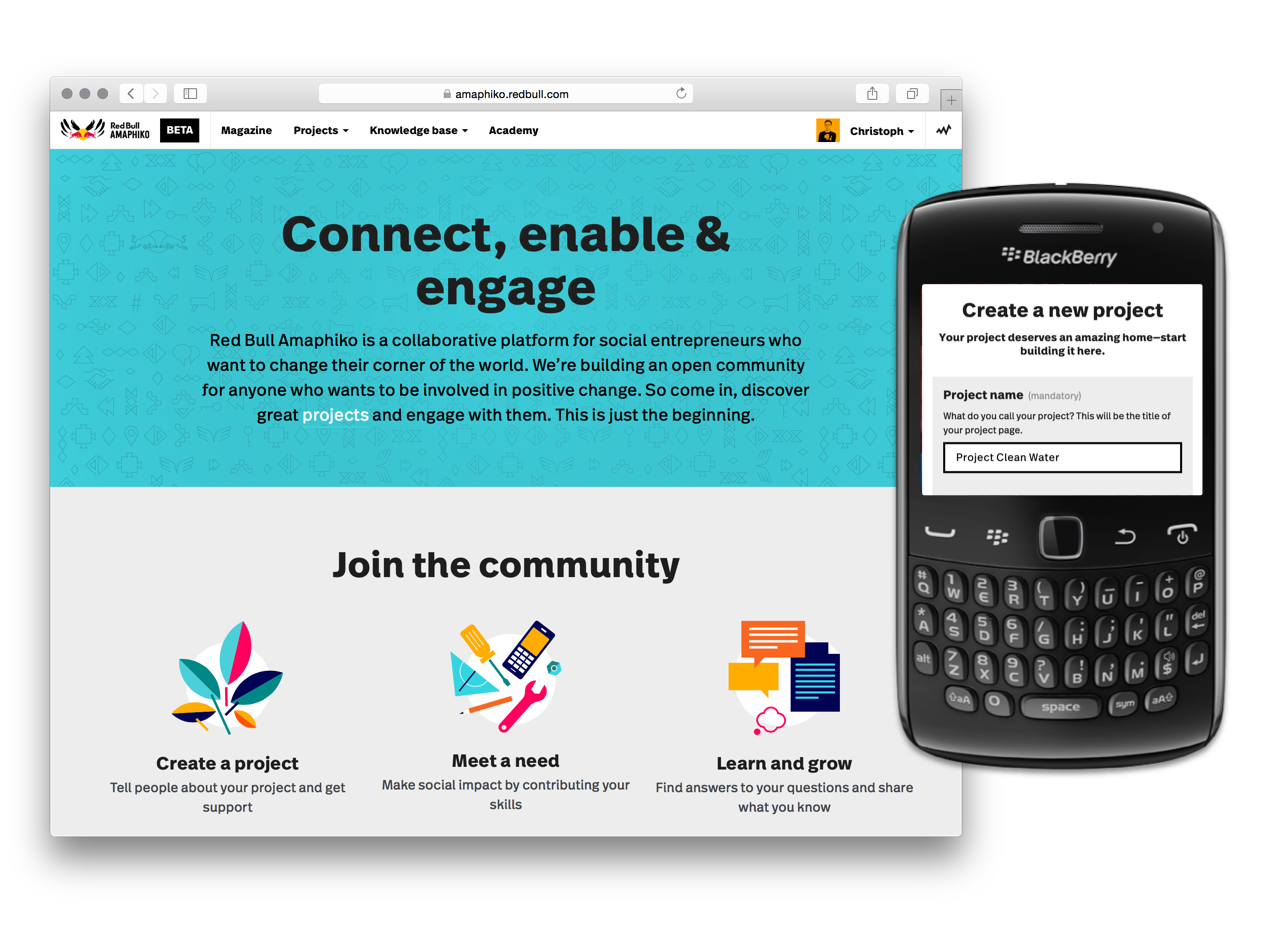

Besides implementing all of these features, we had to make them work in regions where internet connections are slow and people browse the web on low-end devices. At the same time, these constraints should not degrade the experience for people with high-end devices and broadband connections.

Testing and settling for the right solutions

With these requirements in mind, we searched for technical solutions that support server-side rendering, allow progressive enhancement on a per-feature level and let us share as much code as possible between the server and browsers.

A year ago the possible options for us were Backbone with Rendr, React and Mercury. For evaluation purposes we built a small example application in each of these frameworks. Each one rendered the first page load on the server, converted the site to a single page application and progressively enhanced a component as soon as JavaScript had loaded.

Although React’s ecosystem was not as mature as it is today, we went with it for multiple reasons. A: React’s component model makes feature-based progressive enhancement easy. B: “react-router” already had most of the features we needed, including server-side rendering. And C: React is built and used by Facebook, and we were about to build a social network as well.

Amaphiko’s Architecture

As most of our backend developers have a lot of experience with Ruby and Rails, we went with a two-server architecture. The API server is a Rails application that handles the business logic, mail delivery and background jobs. The node.js server requests data from the API and renders the page.

Node.js: The node.js server is a small “express” application with basic middleware. Routing is done by “react-router”. 99 percent of our application code is shared between server and browsers. The only difference is the entry file of each environment, as the two get their initial state differently.
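The different entry files could look roughly like the following sketch. It assumes that the server serializes its state into the page and that the browser reads it back from a global. The names `renderDocument`, `readInitialState` and `__INITIAL_STATE__` are made up for illustration and are not taken from the Amaphiko codebase.

```javascript
// Hypothetical sketch: how server and browser entry files might obtain
// their initial state. All names here are illustrative assumptions.

// Server entry: the state comes from the API and is embedded in the HTML.
function renderDocument(markup, state) {
  // Escape "<" so a string like "</script>" in the data cannot end the tag.
  const json = JSON.stringify(state).replace(/</g, '\\u003c');
  return [
    '<!doctype html>',
    '<div id="app">' + markup + '</div>',
    '<script>window.__INITIAL_STATE__ = ' + json + '</script>',
  ].join('\n');
}

// Browser entry: the state is read from the global the server wrote.
function readInitialState(globalObject) {
  return globalObject.__INITIAL_STATE__ || {};
}

// Usage: simulate both sides without a real server or browser.
const state = { user: 'thandi', title: 'a </script> in data' };
const html = renderDocument('<h1>Projects</h1>', state);
const fakeWindow = { __INITIAL_STATE__: JSON.parse(JSON.stringify(state)) };
console.log(html.includes('</script> in data')); // false: "<" was escaped
console.log(readInitialState(fakeWindow).user);
```

Everything apart from these two functions can then be the same module on both sides, which is what makes the high share of common code possible.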

JavaScript: We can decide when to use server- or client-side rendering by using our custom SyncLink component or react-router’s Link. JavaScript, fonts and images are treated as an enhancement and loaded asynchronously after page load. To allow optional image loading we built a custom responsive image solution. Every feature works without JavaScript and we do progressive enhancement on a component level. Our whole codebase is written in ES2015 (ES6) using Babel and JSX.

CSS: Component styles are prefixed with the component’s unique name. For example, CommentForm lives in comment-form/index.jsx and its class names are prefixed with .comment-form. Components never override the styles of other components. To enforce this encapsulation we also built a command line tool that warns us if we break it unintentionally.
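The idea behind such a check can be sketched in a few lines. This is an illustration only, not the actual tool: the function names are hypothetical, and the selector parsing is deliberately naive (flat CSS without nested blocks such as media queries).

```javascript
// Hypothetical sketch of a style encapsulation check. It only illustrates
// the naming convention; it is not the command line tool itself.

// "CommentForm" -> "comment-form"
function toPrefix(componentName) {
  return componentName
    .replace(/([a-z0-9])([A-Z])/g, '$1-$2')
    .toLowerCase();
}

// Return every class name in the CSS that does not carry the prefix.
function findForeignClasses(componentName, css) {
  const prefix = toPrefix(componentName);
  // Drop declaration blocks so values like "1.5em" are not read as classes.
  const selectors = css.replace(/\{[^}]*\}/g, ' ');
  const classNames = selectors.match(/\.[A-Za-z_][\w-]*/g) || [];
  const allowed = (name) =>
    name === prefix ||
    name.startsWith(prefix + '-') ||
    name.startsWith(prefix + '_');
  return classNames
    .map((name) => name.slice(1)) // strip the leading dot
    .filter((name) => !allowed(name));
}

// Usage: the third rule reaches into another component's class.
const css = `
.comment-form { margin: 1.5em 0; }
.comment-form-input { width: 100%; }
.comment-form .avatar { float: left; }
`;
console.log(findForeignClasses('CommentForm', css)); // [ 'avatar' ]
```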

Red Bull Amaphiko was built to work on desktop machines as well as feature phones with slow internet connection.

Out-of-the-box project setup

The setup of our project is intentionally simple. After pulling the frontend repository the only command to run is npm install.

Deploying to staging is as easy as npm run deploy, and deploying to production is done with npm run deploy -- production. Deploy tasks automatically push new or updated static assets to our CDN and update the URLs referencing them accordingly.
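What "update the URLs referencing them" can mean in practice is sketched below. This is one common approach (content-hashed file names plus a manifest), shown under the assumption that something similar happens in the deploy task; the function names and the CDN host `cdn.example.com` are placeholders.

```javascript
// Hypothetical sketch of asset fingerprinting and URL rewriting.
// Function names and the CDN host are placeholders, not the real setup.
const crypto = require('crypto');

// Build a file name that changes whenever the content changes.
function fingerprint(fileName, content) {
  const hash = crypto
    .createHash('sha256')
    .update(content)
    .digest('hex')
    .slice(0, 8);
  const dot = fileName.lastIndexOf('.');
  if (dot === -1) return fileName + '-' + hash;
  return fileName.slice(0, dot) + '-' + hash + fileName.slice(dot);
}

// Replace local asset paths in a document with their CDN URLs.
function rewriteUrls(source, manifest, cdnBase) {
  return Object.keys(manifest).reduce(
    (result, original) =>
      result.split(original).join(cdnBase + manifest[original]),
    source
  );
}

// Usage: fingerprint two assets, then rewrite the page that references them.
const assets = { '/js/app.js': 'console.log("hi")', '/css/app.css': 'body{}' };
const manifest = {};
for (const name of Object.keys(assets)) {
  manifest[name] = fingerprint(name, assets[name]);
}
const page = '<link href="/css/app.css"><script src="/js/app.js"></script>';
console.log(rewriteUrls(page, manifest, 'https://cdn.example.com'));
```

Because the file name depends on the content, unchanged assets keep their URL and only new or updated files need to be uploaded.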

npm run watch starts the local development environment with “webpack-dev-server” and “react-hot-loader” for JS/DOM updates without refreshing the browser or losing the current application state. CSS updates are also injected using livereload. Additionally we have some commands for managing translations.

Keeping the quality level up

I wrote a tool that runs npm scripts for certain git hooks. This allows us to lint changed files before committing. We run integration tests before pushing to GitHub, and they request most of Amaphiko’s routes using “supertest”. They check response codes to make sure that the server is able to render the page without error. Runtime errors are hooked up to an error logging service that sends us mails as soon as something breaks.
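The route check boils down to the following idea. The sketch swaps “supertest” and the real server for a stand-in render function so that it runs without dependencies; `renderRoute`, `findFailingRoutes` and the example routes are hypothetical.

```javascript
// Hypothetical sketch of a route smoke test. The real tests use
// "supertest" against the server; a stand-in function is used here
// to keep the example dependency-free.

// Stand-in for the server: returns a status code and body for a path.
function renderRoute(path) {
  const pages = { '/': 'Home', '/projects': 'Projects' };
  if (path === '/broken') throw new Error('render failed');
  return pages[path]
    ? { status: 200, body: pages[path] }
    : { status: 404, body: '' };
}

// Request every route and collect those that did not respond as expected.
function findFailingRoutes(render, routes) {
  return routes
    .filter((route) => {
      try {
        return render(route.path).status !== route.expect;
      } catch (error) {
        return true; // a thrown error counts as a failed render
      }
    })
    .map((route) => route.path);
}

const routes = [
  { path: '/', expect: 200 },
  { path: '/projects', expect: 200 },
  { path: '/missing', expect: 404 },
  { path: '/broken', expect: 200 },
];
console.log(findFailingRoutes(renderRoute, routes)); // [ '/broken' ]
```

Run from a pre-push hook, a non-empty result would abort the push before a broken route reaches GitHub.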

Release early, release often

Everyone is allowed and encouraged to push to production. We release early and often (multiple times a day). Sometimes we secretly release features on production but don’t link to them to see if they break something.

New features are developed on separate branches and merged with a pull request. We do code reviews before merging those to our “develop” branch. This branch is the latest stable state of the application and can be deployed at any point in time.

We develop using the “staging” version of our API so that we immediately know if any backend change has broken something.

A look into the future

We recently switched to io.js in production, which had a positive impact on memory consumption. The two-server architecture helped a lot to decrease the time it takes to set up a project and increased productivity on both sides. However, it also requires more communication between backend and frontend developers when building a new feature that affects both environments. We are looking forward to trying new technologies like Relay or Falcor that seem to solve these kinds of problems well.

Our biggest performance bottleneck at the moment is the latency between our node.js server and the API server. Both environments should live on the same server so that these requests resolve with minimal latency. We are looking into using Docker to make this possible and have already spent some time during our Maker Days setting up some other projects with it.

CSS modules could help us to automate our style encapsulation, which currently relies on strict naming conventions. How to use CSS modules in a universal JavaScript context is not yet completely solved, though, and requires an additional build step for the server.

In conclusion we are very happy with the decisions we made. Eric even said it’s the cleanest codebase he has ever worked with. Writing modular and encapsulated components with React enabled us to add new features and scale while keeping code quality high.

Want to know more about something mentioned in the article? I’m happy to answer any questions on our “Ask Me Anything” GitHub repository. Feel free to send me a mail or a message on Twitter as well.